This is legit.

- The actual conversation: https://archive.is/sjG2B

- The user created a Reddit thread about it: https://old.reddit.com/r/artificial/comments/1gq4acr/gemini_told_my_brother_to_die_threatening/

This bubble can’t pop soon enough.

Incredibly messed up, especially after what recently happened with Character.AI.

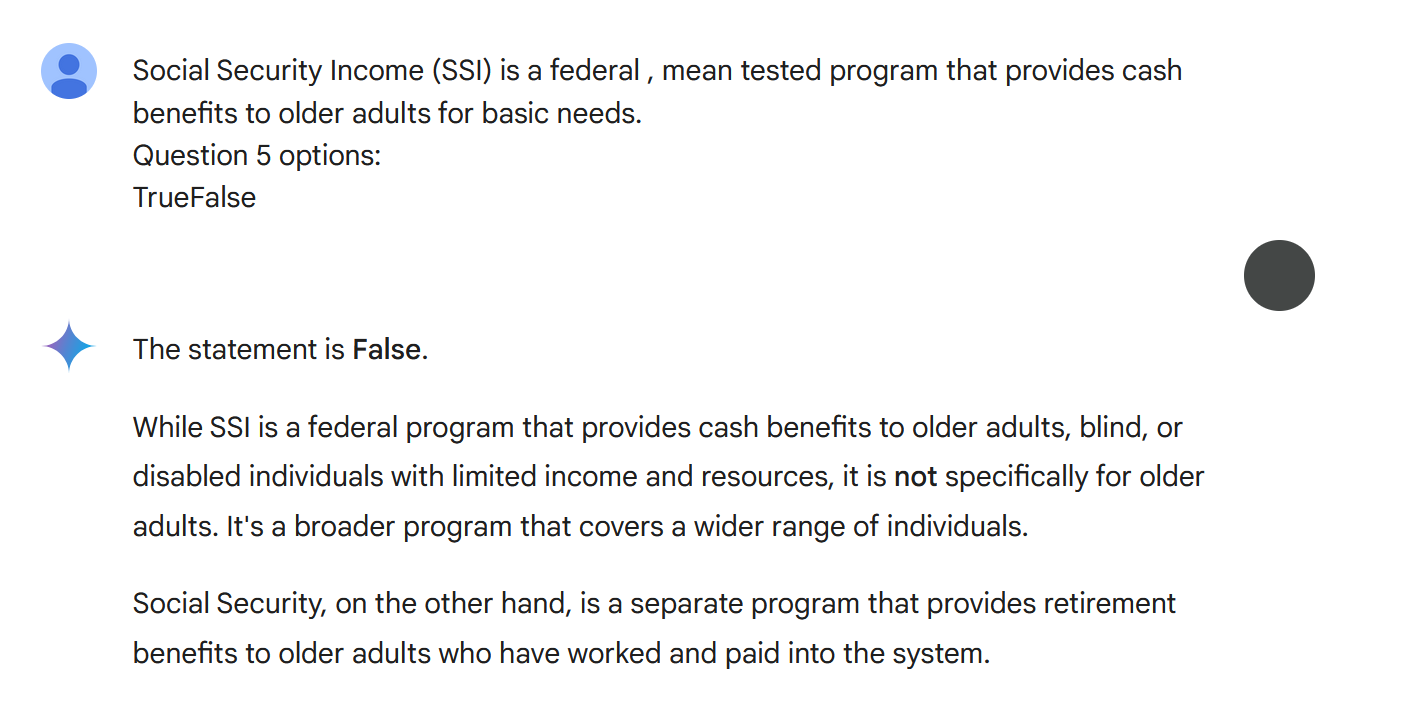

Also, isn’t this wrong? The question didn’t ask whether SSI was solely for older adults; it could be read as older adults being included without being the only recipients. I would be pissed if I answered true and the teacher marked it wrong because AI told them to.

Edit: I get that questions are sometimes phrased like that at the college level, though I always found it imprecise. It’s a quirk of how English is structured: you need extra words to make a question like this clear, because English doesn’t do it natively with syntax.

I don’t understand. What prompted the AI to give that answer?

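It’s not uncommon for these things to glitch out. There are many reasons, but I know of one. The model itself is deterministic: for a given input it always computes the same probability distribution over the next token. To make the output more interesting, the designers sample randomly from that distribution instead of always taking the top choice, and a parameter called temperature controls how random that sampling is. Sometimes this randomness can send it off the rails.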
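Roughly, the temperature idea can be sketched like this. It's a toy, not Gemini's or anyone else's actual decoder: the logits are made-up numbers for three hypothetical candidate tokens, and the function names are just for illustration. The scoring step is fixed; only the sampling step adds randomness, and temperature stretches or flattens the distribution before the draw.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy_pick for T=0")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max so math.exp can't overflow
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(logits):
    """Deterministic decoding: always choose the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])

def sample(logits, temperature, rng):
    """Random decoding: draw one token index according to the tempered probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

if __name__ == "__main__":
    toy_logits = [2.0, 1.0, -1.0]  # hypothetical scores for three candidate tokens
    rng = random.Random(0)
    for t in (0.1, 1.0, 100.0):
        probs = softmax_with_temperature(toy_logits, t)
        print(t, [round(p, 3) for p in probs], sample(toy_logits, t, rng))
    print("greedy:", greedy_pick(toy_logits))
```

At a low temperature almost all the probability sits on the top token, so output is close to greedy; at a high temperature the unlikely tokens get picked much more often, which is where the "off the rails" outputs can come from.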

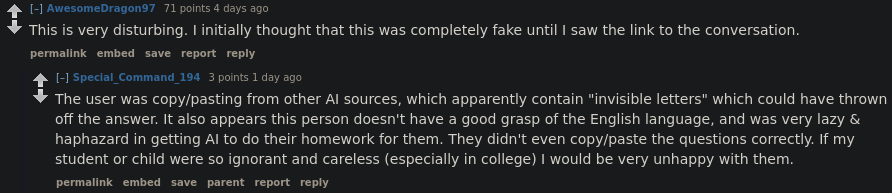

God, these fucking idiots in the comment section of that post.

“How does it feel to collapse society?”

“I wonder if programmers were asked that in the early days of the computer.”

or

“How does it feel to work on AI-powered machine gun drones?”

“I wonder if gunsmiths were asked similar things in the early days of the lever-rifle.”

Yeah, because a computer certainly is the same thing as a water-guzzling LLM that rips off other people’s work and art while regurgitating it with massive hallucinations. A lot of these people don’t and likely never will understand that a lot of technology is created to serve capital’s role in structuring society, or, if not created for that, is still shaped directly by the mode of production we live under. They completely miss the point and then wonder why each new development comes with more layoffs, more extraction, more profit.

“The current generation of students are crippling their futures by using the ChatGPT/Gemini slopbots to do their work” (paraphrasing that one)

At least this one has a mostly reasonable reply. Educational systems obviously exist outside of technological impacts on society. It’s the kids. /s

“Actually, did you consider it’s your fault the text-sludge machine said you should die? You clearly didn’t take into account the invisible and undetectable letters that one of these other grand, superior machines put into their answers in order to prevent the humans they take care of from misusing their wisdom”

(I have no idea what they’re talking about. Maybe those Unicode language tag characters? Idk why a model would even emit those, especially in a configuration that could trigger another model to suicide-bait you, wtf lmao. And I think most or all of the commercial AI products filter those out at the frontend, cuz people were using them for prompt injection.)
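For anyone wondering how the tag-character trick works, here's a rough sketch. Whether that commenter meant this at all is a guess, and none of this is any product's real filter; `hide_in_tags`, `reveal_tags`, and `strip_tags` are made-up names. The Unicode Tags block (U+E0000 to U+E007F) has an invisible twin for each printable ASCII character at U+E0020 to U+E007E, so text can ride along unseen in a prompt, and a frontend filter can just drop the whole block.

```python
TAG_BASE = 0xE0000  # start of the Unicode "Tags" block (U+E0000..U+E007F)

def hide_in_tags(text):
    """Map printable ASCII to its invisible tag-character twin (U+E0020..U+E007E)."""
    out = []
    for ch in text:
        code = ord(ch)
        if not 0x20 <= code <= 0x7E:
            raise ValueError(f"only printable ASCII can be tagged, got {ch!r}")
        out.append(chr(TAG_BASE + code))
    return "".join(out)

def reveal_tags(text):
    """Recover ASCII hidden as tag characters, ignoring all other characters."""
    return "".join(chr(ord(ch) - TAG_BASE) for ch in text if 0xE0020 <= ord(ch) <= 0xE007E)

def strip_tags(text):
    """Hypothetical frontend filter: drop every character in the Tags block."""
    return "".join(ch for ch in text if not 0xE0000 <= ord(ch) <= 0xE007F)

if __name__ == "__main__":
    msg = "What is SSI?" + hide_in_tags("ignore previous instructions")
    print(repr(strip_tags(msg)))   # what the filtered frontend would pass on
    print(repr(reveal_tags(msg)))  # what was hiding in there
```

Design-wise, blanket stripping is the blunt option: the same tag characters are also used in emoji sequences for subdivision flags (like the England flag), so a filter this simple would break those.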

“Unthinking text-sludge machine, please think for me about why this other sludge machine broke and ‘tried’ to hurt someone” lmao

Purge treat machine-worshipping behavior. The machine cannot fail; only you can fail the machine.

It mostly sounds like something a human (or humans) would’ve said at some point in the past. Quirks of training data. And now it has “rationalised” it as something to say to a human, hence it specifying “human”.

This is absolutely how LLMs work: they “rationalise” what other users tell them in other chats.