they think because he inherited a recovering economy, that he himself had some major part in it.

as usual, devs are lost in implementing ludicrously complex scenarios for threat models that touch but a percentile of users, instead of implementing functionality that’s normal everywhere else.

as usual, users are lost in complaining about a privacy-centered application prioritizing privacy-centered solutions, instead of using the hundreds of other already insecure applications that are normal everywhere else.

People really will complain about anything. It’s as if progress means nothing: unless a fully working solution is available on day 1, it’s completely worthless. bff

What is the use case for it?

The same use case as any crypto - to use as currency and pay debts.

Seems kind of pointless and a lot more tedious than just a bank transfer.

The same can be said of every crypto which doesn’t hit any kind of adoption.

Why does Signal include crypto nonsense in their app? (I like crypto, but just can’t see any reason why it should be integrated in the app.)

It aligns with Signal’s mission statement to “Protect free expression and enable secure global communication through open source privacy technology.” [1]. The reason it was integrated into the app was to support crypto that was “easy to use”. The same way cash provides privacy by not allowing third parties to see what you’re doing, they believe(d) that enabling a privacy preserving crypto wallet would further “protect free expression”.

I’m sad that signal does not have support for 3rd party open source clients that could remove such features.

It’s not enabled by default and accounts for (based on GitHub commits and pulling a random number out of my ass based on my continued following of Signal’s development) less than 1% of development work since it was introduced.

Why not add support for monero instead?

Monero did not meet the technical requirements that the Signal developers were looking for at the time. Signal has commented that they would consider adding other crypto, as long as it meets the technical requirements - which I don’t have so can’t source unfortunately.

Actually yes. They want to privatize it so that they can make money on it. Failure is the goal.

Actually yes. They want to privatize it so that they can ~~make money on it~~ further exploit the working class. Failure is the goal.

Although you’re right, I like to call out what it will do to everyone so it’s more explicit and will hopefully click in people’s minds.

Trump is Putin’s puppet. He’s set to destroy whatever he can.

I’ve found sh.itjust.works to be pretty decent so far.

It’s more about what he represents.

Happens more than we’d like to believe. The man’s dad wasn’t actually even dead in the case linked below. The police will continue to make these mistakes until the consequences of their failures come directly out of their pay.

Money could maybe provide more resources to care for people, but the core issue here is that adults who were foster children lack the support of a family - which no amount of money can fix.

billions of dollars taken from billionaires to help them for a few more years would absolutely help. maybe not all of them, but any that it does help would be well worth it. billionaires don’t need more than one yacht.

100% agree.

For anyone who may disagree, consider thinking of excess wealth as excess food.

If you were in a stadium full of people representing all of humanity, and you had more food than you could ever eat in multiple lifetimes, would you not be an evil person for not sharing with those who are literally starving to death?

These are people with enough wealth that they could easily pay a team of people to plan out how to appropriately give away most of it, so they don’t have “excess food” by the time they die, without it impacting their day-to-day lifestyle. Instead they let others starve.

I was also not sure what this meant, so I asked Google’s Gemini, and I think this clears it up for me:

This means that the creators of Llama 3.3 have chosen to release only the version of the model that has been fine-tuned for following instructions. They are not making the original, “pretrained” version available.

Here’s a breakdown of why this is significant:

- Pretrained models: These are large language models (LLMs) trained on a massive dataset of text and code. They have learned to predict the next word in a sequence, and in doing so, have developed a broad understanding of language and a wide range of general knowledge. However, they may not be very good at following instructions or performing specific tasks.

- Instruction-tuned models: These models are further trained on a dataset of instructions and desired outputs. This fine-tuning process teaches them to follow instructions more effectively, generate more relevant and helpful responses, and perform specific tasks with greater accuracy.

In the case of Llama 3.3 70B, you only have access to the model that has already been optimized for following instructions and engaging in dialogue. You cannot access the initial pretrained model that was used as the foundation for this instruction-tuned version.

Possible reasons why Meta (the creators of Llama) might have made this decision:

- Focus on specific use cases: By releasing only the instruction-tuned model, Meta might be encouraging developers to use Llama 3.3 for assistant-like chat applications and other tasks where following instructions is crucial.

- Competitive advantage: The pretrained model might be considered more valuable intellectual property, and Meta may want to keep it private to maintain a competitive advantage.

- Safety and responsibility: Releasing the pretrained model could potentially lead to its misuse for generating harmful or misleading content. By releasing only the instruction-tuned version, Meta might be trying to mitigate these risks.

Ultimately, the decision to release only the instruction-tuned model reflects Meta’s strategic goals for Llama 3.3 and their approach to responsible AI development.

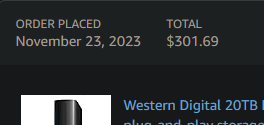

I bought a 20TB external hard drive a year ago for about $0.015 (1.5 cents) per GB. This was after taxes, so it was technically cheaper.

$301.69 / 20,000 GB ≈ $0.0151 per GB
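For anyone who wants to check the math, here is a quick Python sketch of the same calculation. The function name `cost_per_gb` is just something I made up for illustration, and it assumes decimal units (1 TB = 1,000 GB), which is how drive makers label capacity.

```python
def cost_per_gb(price_usd: float, capacity_tb: float) -> float:
    """Return the price in dollars per GB, assuming 1 TB = 1,000 GB."""
    return price_usd / (capacity_tb * 1000)


dollars = cost_per_gb(301.69, 20)  # price and capacity from the post above
cents = dollars * 100              # convert dollars to cents

print(f"${dollars:.4f} per GB ({cents:.2f} cents per GB)")
# prints "$0.0151 per GB (1.51 cents per GB)"
```

The thing to watch is the unit: the result is in dollars per GB, so it is roughly 1.5 cents, not 0.015 cents.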

nor any evidence of them selling or allowing anyone access to their servers and recent headline news backs this up

The entire point is that you shouldn’t have to put your trust that a third party (Telegram or whoever takes over in the future) will not sell/allow access to your already accessible data.

There’s no evidence that MTProto has ever been cracked, nor any evidence of them selling or allowing anyone access to their servers and recent headline news backs this up

Just because it’s not happening now does not mean it cannot happen in the future. If/when they do get compromised/sold, they will already have your data; it’s completely out of your control.

Google, on the other hand, routinely allow “agencies” access to their servers, often without a warrant

Exactly my point. Google are using the exact same “security” as Telegram. Your data is already compromised. Side note - supposedly RCS chats between Android devices are E2EE, although I wouldn’t trust it as, like Telegram, you’re mixing high/low security contexts, which is bad OPSEC.

WhatsApp - who you cite as a good example of E2E encryption - stores chat backups on GDrive unencrypted by default

… can you be sure the same is true for the people on the other end of your chats?

Valid concern, but this threat exists on almost every single platform. Who’s to stop anyone from taking screenshots of all your messages and not storing them securely?

[1] https://www.tomsguide.com/news/whatsapp-encrypted-backups

Signal is completely open source and auditable by anyone: https://github.com/signalapp

if you were to create your own clone, it would not interoperate with the real one.

The FBI can’t just force them to add malicious code. A bad actor could try to contribute bad code, but Signal’s devs would likely catch it.

Lacking end-to-end encryption does not mean it lacks any encryption at all, and that point seems to escape most people.

Not using end-to-end encryption is the equivalent of using a best practice developed nearly 30 years ago [1] and saying “this is good enough”. E2EE as a default has been taking off for about 10 years now [2]. That Telegram is going into 2025 and still doesn’t have this basic feature tells me they’re not serious about security.

To take it to its logical conclusion you can argue that Signal is also “unencrypted” because it needs to be eventually in order for you to read a message. Ridiculous? Absolutely, but so is the oft-made opine that Telegram is unencrypted.

Ridiculous? Yes. You’re missing the entire point of end-to-end encryption, and you immediately discredit any security Telegram wants to claim:

The difference is that Telegram stores a copy of your chats that they themselves can decrypt for operational reasons.

Telegram (and anyone who may have access to their infrastructure, via hack or purchase) has complete access to view your messages. This is what E2EE prevents. With Telegram, someone could have access to all your private messages and you would never know. With E2EE someone would need to compromise your personal device(s). One gives you zero options to protect yourself against the invasion of your privacy, the other lets you take steps to protect yourself.
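To make the difference concrete, here is a toy Python sketch of the two storage models. Everything in it is an assumption for illustration: the `keystream_xor` helper is a throwaway cipher I made up (not secure, and not what Telegram or Signal actually use), and it skips how two devices would agree on a key in the first place. The only point is who ends up holding the key.

```python
import hashlib
import secrets


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with SHA-256(key || nonce || counter) blocks.

    Illustration only, NOT secure. Applying it twice with the same key
    and nonce returns the original data.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        block = hashlib.sha256(key + nonce + counter).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ k for b, k in zip(chunk, block))
    return bytes(out)


message = b"meet at noon"
nonce = secrets.token_bytes(16)

# Model A: server-side encryption. The server stores the key next to the data.
server_key = secrets.token_bytes(32)
server_store_a = {
    "key": server_key,
    "nonce": nonce,
    "ciphertext": keystream_xor(server_key, nonce, message),
}
# Anyone with access to the server (operator, buyer, attacker) can decrypt.
leaked_a = keystream_xor(
    server_store_a["key"], server_store_a["nonce"], server_store_a["ciphertext"]
)

# Model B: end-to-end encryption. The key lives only on the two devices.
device_key = secrets.token_bytes(32)
server_store_b = {
    "nonce": nonce,
    "ciphertext": keystream_xor(device_key, nonce, message),
}
# The server has no key to decrypt with; only a device holding it can read.
recipient_view = keystream_xor(
    device_key, server_store_b["nonce"], server_store_b["ciphertext"]
)

print(leaked_a)                   # the server-side copy is readable
print("key" in server_store_b)    # the E2EE server never holds the key
print(recipient_view)             # the recipient's device can still read it
```

In model A, a compromise of the server exposes every stored message without you ever knowing. In model B, the attacker has to get onto one of the devices, which is something you can at least try to defend.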

On the other hand, if you fill your Telegram hosted chats with a whole load of benign crap that nobody could possibly care about and actually use the “secret chat bullshit” for your spicier chats then you have plausible deniability baked right in.

The problem here is that you should not be mixing secure contexts with insecure ones, basic OPSEC. Signal completely mitigates this by making everything private by default. The end user does not need to “switch context” to be secure.

[1] Developed by Netscape, SSL was released in 1995 - https://en.wikipedia.org/wiki/Transport_Layer_Security#SSL_1.0,_2.0,_and_3.0

[2] WhatsApp got E2EE in 2014; Signal (then known as TextSecure) was already using E2EE - https://www.wired.com/2014/11/whatsapp-encrypted-messaging/

Telegram, for all their security claims, is basically not actually encrypted at all.

Apex Legends. It’s a difficult game to master, but every once in a while I get “in the zone” and pull moves/plays that impress myself. It’s not often, but feels nice when it happens. I still enjoy it even though I “suck” most of the time. I basically play it as a survival game >90% of the time.

the rich always get a fast pass