Shows up like that in the local (non-US) version here too.

And this would have been such an easy way for Google to show a little bit of (at least symbolic) resistance to everything going on…

Maybe your monitor was trying to protect you

Yep, I’m certainly not claiming that Windows is better at it these days… (Possibly unpopular opinion: Windows usability peaked with WinXP.)

Thanks! I uninstalled it and things appear to work normally.

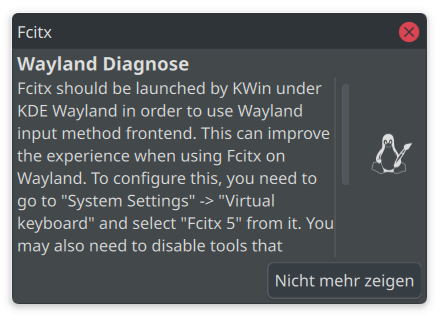

There are days where I think that desktop Linux usability has gotten so good, it has come such a long way since I started using it in the late 90s, and that now it’s really good. And then there are days like today, where I just install some system updates, reboot, and suddenly I’m greeted with:

Note: I have absolutely no idea what “Fcitx” even is. Or why and how it’s launched, or whether I’m actually using it or not. Or what this notification is trying to tell me exactly, and whether it is desirable for me to “improve the experience” with it. Or how the latest updates caused this. It appears that it has something to do with keyboard input, I guess. I assume that I could find out more by crawling the web. But honestly, I’m just too fucking exhausted to even bother figuring it out. I don’t even want to know how much lifetime I’ve already spent chasing Linux problems like that.

I like to use -- in plain text too! LaTeX user high five…?

Although I read somewhere recently that some people consider usage of em-dashes as a sign of AI-generated text. Oh well.

Don’t worry they will use ChatGPT to learn all the COBOL they need.

Oh why would they. They will just rewrite it from scratch in a weekend, right? And reading the original code would only pollute the mind with historic knowledge, and that stands in the way of disruptive innovation.

(btw I appreciate your correctly nested parentheses.)

So after billions of investment, and gigawatt-hours of energy, it’s now “not too bad for throwaway weekend projects”. Wow, great. Let’s fire all the programmers already!

Apart from whatever the fuck that process is, it is not engineering.

And to think that people hated on Visual Basic once… in comparison to this stuff, it was the most solid of solid foundations.

Not clicking those HN links, decided years ago already that site should not be part of my life anymore at all. The few times I have deviated from that rule since, I regretted it.

As for the more general topic, I feel so bad for all trans people with everything that is unfolding. It’s horrible. But be assured that there are many people in this world who are on your side on this. Wish I could say something more useful, but I’m at a loss for words.

Okay thanks for the heads-up, I will give it a try. The “Note to the reader” it starts with is already pretty wild… (unless that’s just part of the fiction. Edit: I assume it’s part of the fiction)

Edit: okay… a few pages in, I don’t think I can do this… not my thing.

You have my sympathy! Is the worst part that you have to review the slop or its general presence at all?

Asking because at my workplace it will be allowed soon, and some coworkers are unfortunately looking forward to it, and I’m horrified, especially by the thought of having to do code review then…

That is indeed troubling, casts a shadow on Project Gutenberg’s judgement. Now I wonder how long until Wikipedia falls too :( Gosh, I miss being excited about new tech. Now new tech is just making things worse.

About that book, so it is more “good bad” instead of “bad bad”? Maybe I’ll take a look, some light/weird reading might be better than doomscrolling (and these days there’s so much doom to scroll).

I have to keep reminding myself that this is the technology that they all claim will soon do all our work, our arts, our science, everything.

Rituals can be good, but yeah, agile standup meetings are not the good kind. Luckily I don’t have them daily… several times a week is already draining enough. If they were daily, I would just burn out. And the standups are IMO not even the worst part of agile…

The best solution right now may be “buy a Macbook and learn MacOS”, which is so depressing.

Depends on whether you include “my personal data is sent to the manufacturer of the computer against my wishes” in your threat model… Apple does many good things for security, and I wish PC hardware makers would take security-related things even just nearly as seriously as them. But I can’t trust Apple anymore either.

(Explanation: the whole iCloud syncing stuff is such a buggy mess. I don’t want it, I don’t need it, so I want it off. But I guess Apple just doesn’t test enough how well it works when you turn it off, maybe they can’t imagine someone not wanting it. The problem is, iCloud sync settings don’t stay off. Settings randomly turn themselves back on, e.g. during OS updates, and upload data before you even notice it. I’m not claiming that’s intentional, I assume it’s just bugs. But I’ve observed such bugs again and again in the past 9 years, and I’ve had enough. Still have a Macbook around, but I use it very rarely these days, only when I need some piece of software on MacOS that has no suitable Linux equivalent.)

While a PC+Linux setup can avoid the specific issue of “don’t randomly upload my data somewhere”, the setup of it all can be a mess, as you say. And then security is still limited by buggy hardware and BIOS/firmware that is frequently full of security holes. The state of computers is depressing indeed (in so many ways, security just being one of them)…

Is it too early to hope that this is the beginning of the end of the bubble?

Also, does someone know why Broadcom was also hit so hard? Is it because they make various networking-related chips used in datacenter infrastructure?

And people believe this … why?

Maybe people believe that all the AI stuff is just magic [insert sparkle emoji], and that can terminate further thought…

Edit: heh, turns out there’s science about that notion

screaming about “theft!” and “hacking!!”

Sounds plausible. Or maybe they will go with a “don’t use it, because privacy!” take. Funny thing is, I actually agree people shouldn’t give them their data. But they shouldn’t give it to OpenAI either…

Ugh. With this, and the recent articles about car makers collecting location data, and the multitude of news about car makers integrating LLMs, it seems that cars are going the same way as TVs, i.e. everything on the market is constantly violating privacy while also throwing ads in your face, and there are no good models left to buy that don’t do it (to my knowledge). Wondering if there will be any good options left when it’s some day time for a new car…