I got a stack of PCs that are very similar if not identical: 3rd gen i7, 8 GB of RAM, 1 TB HDD. All but one are the same HP model with the same motherboard, etc. I upgraded the RAM in a few of them, and I have enough spare 1 TB hard drives to put an extra one in each. Two have Nvidia GeForce 210 GPUs, and in the unique one I’ll probably throw a spare RX 570 I have.

But what to do with them? The easiest answer is probably to sell them all for $75 each, but that’s not what we do here, right? Right now I’m assuming they all support WoL (Wake-on-LAN) and I can easily set up Ansible/AWX for orchestration. I’m just looking for some fun experiments, projects, or actual uses for this tower of PC towers.
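If the WoL assumption holds (it has to be enabled in each HP’s BIOS and NIC settings), waking a node is just a UDP broadcast of a "magic packet": 6 bytes of `0xFF` followed by the target MAC address repeated 16 times. A minimal Python sketch, assuming the machines sit on the same broadcast domain; the MAC address below is a placeholder, and port 9 is a common convention rather than a requirement. Ansible also has a `community.general.wakeonlan` module if you’d rather keep it inside AWX.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet: 6 bytes of 0xFF, then the MAC repeated 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected a 48-bit MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_wol(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP so the sleeping NIC can see it."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Broadcasting must be explicitly allowed on the socket.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))
```

Usage would be something like `send_wol("00:11:22:33:44:55")` with each tower’s real NIC address; loop over a list of MACs to wake the whole stack.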

Build a Proxmox cluster and teach yourself about high-availability services. Tear it down and do it again with XCP-ng. Repeat using more and more complex architectures.
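One of the first HA lessons is quorum: a cluster only keeps running (and only fails VMs over) while a strict majority of votes is present. A small Python sketch of that arithmetic, assuming one vote per node, which is the default in Proxmox’s corosync setup; it shows why odd node counts are usually recommended and why a two-node cluster can’t lose either node without some external tie-breaker.

```python
def quorum(votes: int) -> int:
    """Votes needed for a strict majority: more than half of the total."""
    return votes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many one-vote nodes can go down while the rest stay quorate."""
    return nodes - quorum(nodes)


for n in range(2, 7):
    print(f"{n} nodes: need {quorum(n)} votes, can lose {tolerated_failures(n)}")
```

Going from 3 to 4 nodes doesn’t buy any extra failure tolerance; going to 5 does.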

Sell them and buy something newer

Seriously though, the going rate for old hardware isn’t that different from newer stuff. Try comparing prices for 3rd gen against 6th or 7th gen.

Make a fort

Since they are old, I would imagine their power efficiency isn’t the best for a 24/7 HA cluster at home, unless you have an abundance of solar power or something. So I would use them as a test branch for whatever I want to do for self-hosting and learning.
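Whether 24/7 is worth it comes down to average draw times hours times your electricity price. A quick Python sketch of that arithmetic; the 40 W per tower, the node count, and the 0.15 per kWh price are hypothetical placeholders, so plug in numbers from a power meter and your own bill.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def annual_kwh(avg_watts: float) -> float:
    """Energy used in a year by something drawing `avg_watts` on average, 24/7."""
    return avg_watts * HOURS_PER_YEAR / 1000


def annual_cost(avg_watts: float, price_per_kwh: float) -> float:
    """Yearly cost, in whatever currency `price_per_kwh` is quoted in."""
    return annual_kwh(avg_watts) * price_per_kwh


# Hypothetical: 3 towers averaging 40 W each, at 0.15 per kWh.
nodes, watts_each, price = 3, 40.0, 0.15
total_watts = nodes * watts_each
print(f"{annual_kwh(total_watts):.0f} kWh/year, about {annual_cost(total_watts, price):.2f} per year")
```

Average draw is the number that matters here, which is why mostly idle boxes can cost much less than their load figures suggest.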

I would use them as a learning platform for myself: play with Active Directory DCs, replication, failover, recovery, networking, etc., mostly because more practice with those is what I’d need for advancement at work.

Others mentioned Kubernetes and Proxmox clustering. I could also use some sacrificial storage and compute to play around with those technologies so I could improve my self-hosted services.

I’m not sure about anything useful. The best thing would probably be to install Linux and donate them to people in need. For experimentation, sure: set up a Beowulf cluster, learn FreeBSD, orchestration, Kubernetes, Ansible… Use them to test your microservice-architecture software projects, software-defined networking…

I would look into setting up a Proxmox cluster with high availability on them, and from there you can look into fun projects that you can run as Proxmox VMs or LXCs.

https://www.xda-developers.com/proxmox-cluster-guide/

https://pve.proxmox.com/wiki/High_Availability

Edit: HA seems to require a shared disk, such as a SAN or NAS.

You should be able to do HA with Ceph, I think. You can also do almost-HA with ZFS storage replicated between nodes: failover still works, but instead of losing nothing you lose any data written since the last sync (a few minutes max, depending on the sync schedule).

Ceph is great, we run critical infra at work on proxmox with ceph. Very reliable in my experience. It was definitely helpful for me to have ceph experience from my home cluster when starting there.

Ah right, that rings a bell. Proxmox and Ceph sounds like a perfect experiment for OP’s hardware. :)

https://pve.proxmox.com/pve-docs/chapter-pveceph.html
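When sizing Ceph on boxes like these, remember that a replicated pool stores every object several times (Ceph’s default pool size is 3), so usable space is roughly raw space divided by the replica count, minus headroom. A rough Python sketch, assuming each tower donates its spare 1 TB HDD as one OSD and that you keep usage under the 0.85 "nearfull" warning ratio Ceph uses by default; the node count is hypothetical. On a small cluster, the headroom you actually need to re-replicate after losing a whole node can be larger than this.

```python
def ceph_usable_tb(nodes: int, osd_tb_per_node: float,
                   replicas: int = 3, nearfull: float = 0.85) -> float:
    """Rough planning figure for usable capacity of a replicated Ceph pool.

    Each object is stored `replicas` times, and usage is capped at the
    `nearfull` fraction of raw space to leave room for recovery.
    """
    if nodes < replicas:
        # With a per-host failure domain, each replica must land on a different node.
        raise ValueError("need at least as many nodes as replicas")
    raw_tb = nodes * osd_tb_per_node
    return raw_tb / replicas * nearfull


# Hypothetical: 4 towers, each with one spare 1 TB HDD as an OSD.
print(f"{ceph_usable_tb(4, 1.0):.2f} TB usable")
```

In other words, don’t expect the spare drives to turn into a big storage pool; the point of this experiment is learning how Ceph behaves when nodes disappear.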

Eh… Maybe for learning.

Although they technically support VT-d, performance on 13-year-old machines will be pretty abysmal by today’s standards.

Even first gen i-series Intel CPUs support VT-d. I had an i7-870 that ran my entire setup under Proxmox for several years, until early 2023.

What you really need is RAM. In my case, ~32 GB per node in a three-machine cluster is not quite enough, but a 4c/8t CPU is more than plenty.
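Part of why RAM runs out in a cluster is that HA only works if the surviving nodes have room to restart the failed node’s guests. A Python sketch of that budget, assuming you plan for one node failure and reserve a fixed chunk per node for the hypervisor itself; the 4 GB reserve is a hypothetical placeholder, and running Ceph OSDs on the same nodes would push it higher.

```python
def guest_ram_budget_gb(nodes: int, ram_per_node_gb: float,
                        host_reserve_gb: float = 4.0, node_failures: int = 1) -> float:
    """RAM you can hand to VMs/containers and still restart all of them
    after `node_failures` nodes drop out.

    `host_reserve_gb` is a hypothetical per-node allowance for the hypervisor.
    """
    survivors = nodes - node_failures
    if survivors < 1:
        raise ValueError("need at least one surviving node")
    return survivors * (ram_per_node_gb - host_reserve_gb)


print(guest_ram_budget_gb(3, 32))  # three 32 GB nodes
print(guest_ram_budget_gb(4, 8))   # four stock 8 GB towers
```

With stock 8 GB towers the guest budget is small, which makes the RAM upgrades OP already did the most valuable part of the hardware.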

They are not too terrible, really. 3rd gen i7 is the Ivy Bridge generation, so 22 nm. For many homelab server tasks the CPUs would be just fine. Power efficiency is of course worse than modern CPUs, but way better than the previous 32 nm Sandy Bridge generation. I had such a system with integrated graphics and one SSD, and it drew 15 W at idle at the wall.

My first gen i7 would still be going strong if the mobo hadn’t started dying. Especially running Linux.

Yeah, I focused on the "I’m just looking for some fun experiments, projects" part.

I wouldn’t use the machines for anything other than experimenting for fun; they’re power hungry too, relative to their performance.

Efficiency at load isn’t always the most important metric, depending on what you are using the machines for. If they are mostly idle, efficiency isn’t too bad. Many server tasks don’t fully load the CPU anyway.

That’s true, if there’s no load then the difference isn’t much money.

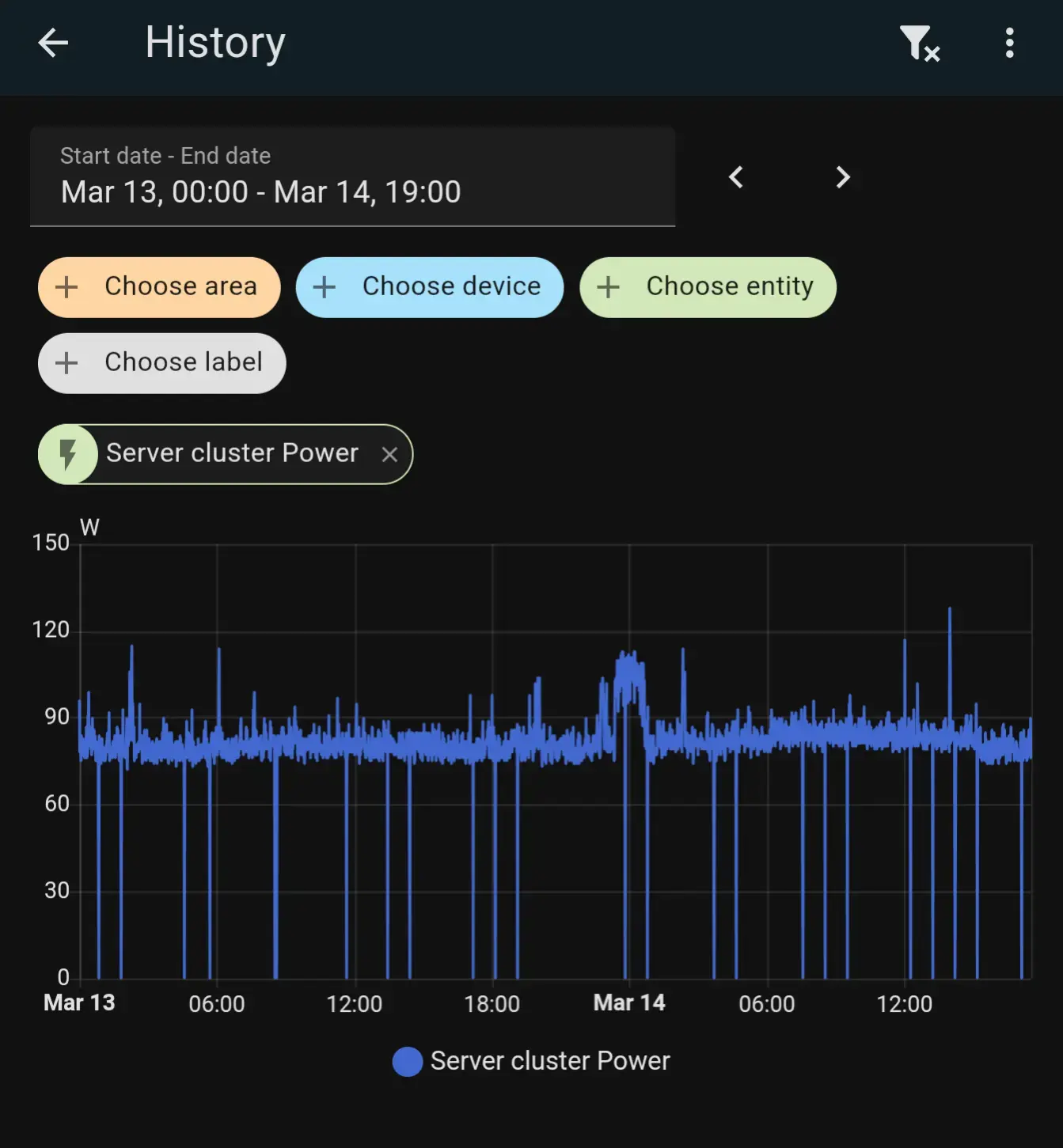

I’m running a NAS, some game servers, a Forgejo instance, a Jellyfin server and more on my machine, so it’s never truly idle, and I forgot to think about that metric.

Slap a few Zigbee smart plugs into your setup, group them in Home Assistant, and measure the total power draw. That’s what I do. It’s eye-opening… I learned that my 5800X3D/7900 XTX gaming PC is capable of pulling many times more power than my entire server cluster. I shut that thing off when I’m not using it now, haha.
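If you’d rather pull the smart-plug readings into a script than build a dashboard, Home Assistant’s REST API returns each entity’s current state at `/api/states/<entity_id>` when you send a long-lived access token as a Bearer header. A minimal Python sketch, assuming hypothetical power-sensor entity IDs such as `sensor.rack_plug_1_power`; plugs that are offline report states like `unavailable`, so they are skipped rather than crashing the sum.

```python
import json
import urllib.request


def fetch_state(base_url: str, token: str, entity_id: str) -> dict:
    """Fetch one entity's state object from the Home Assistant REST API."""
    req = urllib.request.Request(
        f"{base_url}/api/states/{entity_id}",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def total_watts(states: list[dict]) -> float:
    """Sum numeric power readings, skipping 'unavailable'/'unknown' plugs."""
    total = 0.0
    for s in states:
        try:
            total += float(s["state"])
        except (KeyError, ValueError):
            continue
    return total
```

Usage would look like `total_watts([fetch_state(url, token, e) for e in plug_entities])`, where `url`, `token`, and `plug_entities` are your own (hypothetical here) values.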

My server has a gaming VM with GPU passthrough (6650 XT). With my VM powered on and idle, the whole server draws about 60-65 W. Monitor not included.

Damn, that’s impressive. My rig idles at ~110 W, but I’ve heard that the 5800X3D just… does that, especially paired with AMD’s beefiest GPU.

I would want to do a cluster. Just to learn how that works. But just thinking of the electricity cost, I would personally donate them.

Unless you’re redlining your systems 24/7, the load really shouldn’t be that bad.

That might be the case. But I have done a great job of reducing my server’s power draw from 1200 W down to 65 W, and I am slowly trying to get to the point where I can offload my servers to solar and battery. I live in a place that doesn’t get great sun, though.

But I realize I didn’t include that in the original post. So, fair point and thanks for the info!

I run 3x 7th gen Intel mini PCs in a Proxmox cluster, plus a 2014 Mac mini on a 4th gen i5 attached to 3x 4TB drives in RAID5 (my NAS), plus an 8TB backup drive. I also run Home Assistant on a Lenovo M710q Tiny (separate because I use Zigbee and don’t wanna deal with USB passthrough and migrating VMs and containers…). Total average draw is ~100W.

Back in the day, I set up a little cluster to run compute jobs. Configured some spare boxes to netboot off the head-node, figured out PBS (dunno what the trendy scheduler is these days), etc. Worked well enough for my use case - a bunch of individually light simulations with a wide array of starting conditions - and I didn’t even have to have HDs for every system.
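That kind of workload, many independent light simulations over a grid of starting conditions, is easy to spread across identical boxes. A Python sketch of the idea, assuming hypothetical parameter names and node names: expand every combination of starting conditions, then deal the jobs out round-robin. A real scheduler such as PBS (or Slurm) does the assignment dynamically instead of up front, which copes better with jobs of uneven length.

```python
from itertools import product


def sweep(params: dict[str, list]) -> list[dict]:
    """Expand a parameter grid into one job (a dict of values) per combination."""
    keys = list(params)
    return [dict(zip(keys, combo)) for combo in product(*(params[k] for k in keys))]


def assign(jobs: list, nodes: list[str]) -> dict[str, list]:
    """Deal jobs out to nodes round-robin (static assignment, no load awareness)."""
    plan = {n: [] for n in nodes}
    for i, job in enumerate(jobs):
        plan[nodes[i % len(nodes)]].append(job)
    return plan


# Hypothetical starting conditions and node names.
jobs = sweep({"temperature": [250, 300, 350], "seed": [1, 2, 3, 4]})
plan = assign(jobs, ["tower1", "tower2", "tower3", "tower4"])
for node, node_jobs in plan.items():
    print(node, len(node_jobs))
```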

These days, with some smart switches, you could probably work up a system to power nodes on/off based on the scheduler demand.
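The decision half of that idea can be small: look at the scheduler’s queue, work out how many nodes the pending work needs, and wake or power down the difference. A Python sketch under stated assumptions: `slots_per_node` (jobs a node runs at once) and the min/max node limits are hypothetical knobs, and how you actually switch nodes (Wake-on-LAN, smart plugs, a clean shutdown over SSH) is left out.

```python
import math


def nodes_to_run(pending_jobs: int, slots_per_node: int,
                 min_nodes: int = 1, max_nodes: int = 8) -> int:
    """Nodes needed to run all pending jobs at once, clamped to [min_nodes, max_nodes]."""
    if slots_per_node < 1:
        raise ValueError("slots_per_node must be at least 1")
    wanted = math.ceil(pending_jobs / slots_per_node)
    return max(min_nodes, min(max_nodes, wanted))


def power_delta(current_on: int, pending_jobs: int, slots_per_node: int, **limits) -> int:
    """Positive: nodes to wake. Negative: nodes to power off. Zero: leave as is."""
    return nodes_to_run(pending_jobs, slots_per_node, **limits) - current_on


print(power_delta(current_on=5, pending_jobs=17, slots_per_node=8))
```

In practice you’d want some hysteresis (for example, only powering a node off after its queue has been empty for a while) so old machines aren’t cycled on and off every few minutes.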

Proxmox cluster

Perhaps a good time to mention I have several Raspberry Pis I could add to the mix.

Cluster them and do something funny.

Refurb all, sell all but three, set up a cluster. Then when you’re satisfied, sell those three and use all the money to buy one or two systems with modern hardware.