My math degree is only a handful of years old, but I don’t do math anymore, so I have no clue how horrified I should be.

Congrats on finishing your degree

As far as I can tell, every step but the last one is wrong.

If anyone’s curious, the first step is wrong: e^x minus its integral is a constant, not necessarily zero.
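To make that constant concrete, here is a worked example that assumes the integral is given an explicit lower bound $a$ (the image leaves the bounds unspecified):

$$
\frac{d}{dx}\left(e^x - \int_a^x e^t \, dt\right) = e^x - e^x = 0
\quad\Longrightarrow\quad
e^x - \int_a^x e^t \, dt = e^a .
$$

With $a = 0$ the constant is $1$; it only becomes $0$ in the limit $a \to -\infty$.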

Ok. Take the derivative of both sides and integrate at the end, then plug in a value of x to get C = 0.

In step two you can’t take e^x out of the integral, because it is being integrated with respect to x.
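A quick sanity check of why this fails if the juxtaposition is read as ordinary multiplication: pulling out a factor that depends on the integration variable changes the answer.

$$
\int e^x \, dx = e^x + C
\qquad\text{but}\qquad
e^x \int 1 \, dx = e^x (x + C) = x e^x + C e^x .
$$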

behold! you have found ze joke

Apart from the first assumption, that e^x equals its own integral, everything else actually makes sense (except that the dx’s are in the wrong powers). You simply treat the “1” and “integral dx” as operators, formally functions from R^R into R^R, and “(0)” as evaluating the operator on the constant-valued function 0. EDIT: the step 1/(1 - integral) = the limit of a certain series is slightly dubious, but I believe it can be formally proven as well. EDIT 2: I was proven wrong, read the comments.
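A rough numerical sketch of the operator reading, under assumptions that are not in the image: functions are replaced by samples on a grid over [0, 1], Int is the definite integral from 0 to x (computed with the cumulative trapezoid rule), and the series is applied to the constant function 1 rather than to 0, because with a fixed lower bound of 0 the equation becomes f = 1 + Int f. The helpers `const`, `integral`, `add`, and `neumann` are hypothetical names chosen for illustration.

```python
import math

N = 1000                              # grid intervals on [0, 1] (an arbitrary choice)
XS = [i / N for i in range(N + 1)]    # sample points x_0 = 0, ..., x_N = 1
H = 1 / N                             # grid spacing


def const(c):
    """The constant-valued function c, as samples on the grid."""
    return [float(c)] * len(XS)


def integral(f):
    """The operator Int: f -> (x -> integral of f from 0 to x), via cumulative trapezoids."""
    out = [0.0]
    for left, right in zip(f, f[1:]):
        out.append(out[-1] + H * (left + right) / 2)
    return out


def add(f, g):
    """Pointwise sum of two sampled functions."""
    return [a + b for a, b in zip(f, g)]


def neumann(f, terms=30):
    """Partial sum (1 + Int + Int^2 + ... + Int^(terms-1)) applied to f."""
    total = const(0)
    term = f
    for _ in range(terms):
        total = add(total, term)
        term = integral(term)
    return total


approx = neumann(const(1))
print(approx[-1], math.e)              # value at x = 1 next to e
print(approx[N // 2], math.exp(0.5))   # value at x = 0.5 next to e^0.5
```

Thirty terms is plenty here because Int^k applied to 1 is x^k/k!, which decays factorially on [0, 1]; the remaining error comes almost entirely from the trapezoid rule.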

That’s the thing about physicists doing math. They know the universe already works. So if they break some math on the way to an explanation, so what? You can fix math. They care about the universe. It’s pretty cool sometimes. Like bra-ket notation is really an expression of the linear algebra concepts of dual spaces and adjoints. But to a physicist, it’s just how the math should work if it is to do anything useful.
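For the curious, the dictionary between bra-ket notation and those linear algebra concepts, for a Hilbert space $\mathcal{H}$ and a bounded operator $A$, is roughly:

$$
|\psi\rangle \in \mathcal{H}, \qquad
\langle\varphi| \in \mathcal{H}^*, \qquad
\langle\varphi| : |\psi\rangle \mapsto \langle\varphi|\psi\rangle, \qquad
\left(|\psi\rangle\right)^\dagger = \langle\psi|, \qquad
\langle\varphi|A|\psi\rangle = \langle A^\dagger\varphi|\psi\rangle .
$$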

So yeah, this post looks like nonsense. Because it is. But there is a lesson that “math” should work like this, and there is utility in pushing the limits. No pun intended.

Edit: I’m not claiming this is a useful application. It’s circular reasoning, as this post’s parent alludes to.

Is it immediately obvious that the inverse of the operator L is 1/L, though? Much less the series expansion for the operator…

If you try to fill in the technical details, it will be a lot of work compared to a simpler calculus-based alternative.
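One such simpler route, sketched here under the assumption that this is the kind of alternative meant, uses only $f' = f$ and $f(0) = 1$:

$$
f' = f \;\Rightarrow\; f^{(k)} = f \;\Rightarrow\; f^{(k)}(0) = 1
\;\Rightarrow\; e^x = \sum_{k=0}^{\infty} \frac{f^{(k)}(0)}{k!}\, x^k = \sum_{k=0}^{\infty} \frac{x^k}{k!},
$$

where the last equality holds because the Lagrange remainder $e^{\xi} x^{n+1}/(n+1)!$ goes to zero for every fixed $x$.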

But then again, some mathematicians spent the better part of a century formalizing the mathematics used by physicists like Dirac (spoiler: it all turned out to be valid).

After careful consideration, I have come to the conclusion that the inverse of the operator L is obviously not 1/L, and you are absolutely right. This derivation is complete nonsense, my apologies. In fact, no such inverse can even exist for the operator 1 - integral, as it is not injective.

What is meant here, I believe, is (1 - Int)^-1. Writing 1/(1 - Int) is an abuse of notation, especially when the numerator isn’t just 1 but another operator, since it loses the distinction between a left and a right inverse. But for a bounded linear operator on a Banach space with spectral radius less than 1 (norm less than 1 suffices), and I think Int over an appropriately chosen space of functions qualifies, (1 - Int)^-1 equals the Neumann series $\sum_{k=0}^{\infty} \mathrm{Int}^k$, exactly as in the derivation.
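A small exact check of the Neumann series claim, assuming Int is the integral from 0 to x and restricting to polynomials with rational coefficients (stored as lists of `Fraction`, where `p[k]` is the coefficient of x^k). The helper names are hypothetical. It shows that the partial sums of the series applied to 1 have coefficients 1/k!, and that (1 - Int) applied to the n-th partial sum leaves exactly 1 - x^(n+1)/(n+1)!, the telescoping that makes the series an inverse once the tail vanishes.

```python
from fractions import Fraction
from math import factorial

# A polynomial is a list of Fractions; p[k] is the coefficient of x**k.


def add(p, q):
    """Sum of two polynomials, padding the shorter list with zeros."""
    n = max(len(p), len(q))
    p = p + [Fraction(0)] * (n - len(p))
    q = q + [Fraction(0)] * (n - len(q))
    return [a + b for a, b in zip(p, q)]


def integrate(p):
    """Int p: the antiderivative of p that vanishes at x = 0."""
    return [Fraction(0)] + [a / (k + 1) for k, a in enumerate(p)]


def one_minus_int(p):
    """The operator (1 - Int) applied to p."""
    return add(p, [-a for a in integrate(p)])


def neumann_partial_sum(p, n):
    """(1 + Int + Int^2 + ... + Int^n) applied to p."""
    total, term = [Fraction(0)], p
    for _ in range(n + 1):
        total = add(total, term)
        term = integrate(term)
    return total


one = [Fraction(1)]
s5 = neumann_partial_sum(one, 5)
print(s5 == [Fraction(1, factorial(k)) for k in range(6)])  # True: coefficients are 1/k!
print(one_minus_int(s5))  # 1 - x**6/6!: first entry 1, last entry -1/720, zeros between
```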

Int is injective: Take Int f = Int g, apply the derivative, and the fundamental theorem gives you f = g. I think you can make it bijective by working with equivalence classes of functions that differ only by a constant.

Int is definitely not injective when you consider discontinuous functions (such as f(x) = 1 if x = 0, else 0, which has the same integral as the zero function). If you consider only continuous functions, then unfortunately 1 - Int is also not injective: consider, for example, e^x and 2e^x. Your idea with equivalence classes also fails, as for L = 1 - Int, L(f) = L(g) only implies L(f - g) = 0, so for f(x) = x and g(x) = x + e^x we get L(f) = L(g).
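Spelled out: any reading of Int under which e^x is “its own integral” puts $e^x$ in the kernel of $1 - \mathrm{Int}$, and linearity does the rest. Examples of such readings are $\mathrm{Int} f = \int_{-\infty}^{x} f(t)\,dt$ or antiderivatives taken modulo constants; both are assumptions, since the image fixes no bounds.

$$
(1 - \mathrm{Int})(c\,e^x) = c\,e^x - c\,\mathrm{Int}(e^x) = 0 \quad (c \in \mathbb{R}),
\qquad
(1 - \mathrm{Int})(x + e^x) = (1 - \mathrm{Int})(x) + (1 - \mathrm{Int})(e^x) = (1 - \mathrm{Int})(x).
$$

The second identity needs $\mathrm{Int}(x)$ to be defined, so it fits the modulo-constants reading rather than the $-\infty$ lower bound.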

Sets of measure zero are unfair. But you’re right: the second line in the image is basically an eigenvector equation for Int with eigenvalue 1, where the whole point is that there is a subspace that 1 - Int maps to zero.
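The eigenvalue equation can be solved directly. Assuming $\mathrm{Int} f = \int_{-\infty}^{x} f(t)\,dt$ on functions for which that integral converges, differentiating gives

$$
\mathrm{Int} f = \lambda f \;\Longrightarrow\; f = \lambda f' \;\Longrightarrow\; f(x) = c\,e^{x/\lambda} \quad (\lambda > 0),
$$

and indeed $\int_{-\infty}^{x} e^{t/\lambda}\,dt = \lambda e^{x/\lambda}$, so $\lambda = 1$ gives the $c\,e^x$ eigenspace from the image. With lower bound $0$ instead, there are no eigenvectors at all: for $\lambda \neq 0$, $\mathrm{Int} f = \lambda f$ forces $f(0) = 0$ and $f = \lambda f'$, hence $f \equiv 0$, and for $\lambda = 0$ a continuous $f$ with $\mathrm{Int} f = 0$ is zero.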

I’m still curious whether one could make this work. This looks similar to problems encountered in perturbation theory, when you look for eigenvectors of an operator related to one whose spectrum you already know.

Well, sets of measure 0 are one of the fundamentals of the whole of integration theory, so it is always wise to pay particular attention to their behaviour under certain transformations. The whole 1 + int + int^2 + … series intuitively really seems to work as an inverse of 1 - int over a special subspace of R^R. I think a good choice would be the space of polynomials in x and e^x (to leave no ambiguity: R[X, e^X]). It is all we need to prove this theorem, and these operators behave much more predictably in it. It would be nice to find a formal definition for the convergence of the series, but I can’t think of any metric that would scratch that itch.
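One candidate metric, offered as a suggestion: take Int to be $\int_0^x$ acting on continuous functions on $[0, a]$ with the sup norm $\|f\|_\infty = \max_{[0,a]} |f|$. Induction on $n$ gives

$$
|\mathrm{Int}^n f(x)| \le \frac{x^n}{n!}\,\|f\|_\infty
\quad\Longrightarrow\quad
\|\mathrm{Int}^n\| \le \frac{a^n}{n!},
\qquad
(1 - \mathrm{Int}) \sum_{k=0}^{N} \mathrm{Int}^k = 1 - \mathrm{Int}^{N+1} \longrightarrow 1,
$$

so $\sum_n \|\mathrm{Int}^n\|$ converges and the Neumann series converges in operator norm. $\mathbb{R}[X, e^X]$ sits inside this space, and $\int_0^x$ maps it into itself.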

If you “fill in” the indefinite integral with a definite integral from -infinity to x, then I think the first step works. I’m not 100% sure how to deal with the 1/(1 - integral) step, but my guess would be to transform to the Laplace domain, because Laplace transform analysis is “aware of” convergence issues, i.e. the region of convergence “pops out” when calculating the transform, or it’s present in the lookup table entry.
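Here is how that might look with the one-sided Laplace transform. It uses lower bound 0 rather than -infinity, which is an assumption that changes the constant, so the equation becomes $f = 1 + \int_0^t f$. With $\mathcal{L}\{f\} = F(s)$ and the rule $\mathcal{L}\{\int_0^t f\} = F(s)/s$:

$$
F(s) = \frac{1}{s} + \frac{F(s)}{s}
\;\Longrightarrow\;
F(s) = \frac{1/s}{1 - 1/s} = \frac{1}{s-1} = \sum_{k=0}^{\infty} \frac{1}{s^{k+1}} \quad (|s| > 1)
\;\Longrightarrow\;
f(t) = \sum_{k=0}^{\infty} \frac{t^k}{k!} = e^t ,
$$

inverting term by term with $\mathcal{L}^{-1}\{1/s^{k+1}\} = t^k/k!$. The factor $1/(1 - 1/s)$ is literally the “1/(1 - integral)” step, and its geometric series only converges for $|s| > 1$, in line with the region of convergence $\operatorname{Re} s > 1$ of $\mathcal{L}\{e^t\}$.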

Yeah, I don’t remember if the result is correct, but the process is definitely sus.

the result is correct

I don’t believe you can just factor out e^x in the second step like that. That seems incorrect to me. Then again, I’ve been out of calculus for years now.

The process is horribly wrong, but the answer is correct. I think that’s the intended joke.

thanks, that’s what I meant

Why’d the (0) vanish? Everything else seems “justified”.

If we already regularly ignore infinities in the self-energy of electrons and other divergent stuff, what’s a little division by zero here or there?

I think this can be translated into something not completely wrong. I have seen calculus like this in old-school books on operational calculus. They usually use differential operators instead of integrals, although antiderivatives get operational formulas in terms of the differential operator, and the approach leads to the Laplace transform, which exhibits identical operational properties.

You have no idea. This is tame compared to some of the shit I’ve seen in my master’s.

I remember one time we had an exercise where the calculation yielded a violently nonconvergent improper integral.

Now, we had already been introduced to some “tricks” to “deal with” those. And I do mean “tricks” as in magic. Arcane spells best described as “praying to the obscure gods of Borel summation until some incantation accidentally summoned untold horrors that would swallow infinities into fractional dimensions converging to zero” or some bullshit like that.

But this one was so bizarre that the whole class was stumped. Our runes were powerless; what remained of our sanities was fraying and disappearing into the abyss that kept looking back for more.

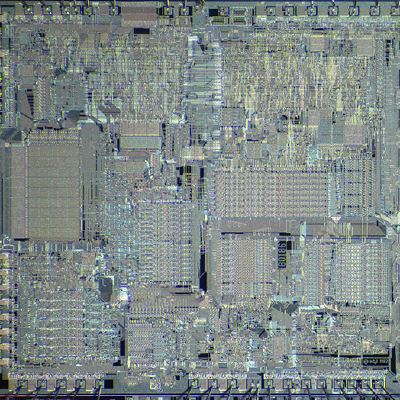

Then the TA just tsk’d at our collective weakness, deadass looked into each of our eyeballs and wrote this on the blackboard

I almost puked on the spot

Aside from the interchange of limits, which remains unjustified, I see no issue

So in physics, the notation $\int \dd{x} f(x) \triangleq \int f(x) \dd{x}$ is common, i.e. the differential is allowed to precede the rest of the integrand. This is because you can end up with some absolutely disgusting integrand that is a complicated function of several parameters in addition to the variable of integration. The idea is to establish, reading from left to right, which variable is the integration variable as soon as possible.
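A made-up example of why this helps: with several parameters floating around, front-loading the differentials makes the integration variables, and their order, obvious at a glance.

$$
\int \dd{x} \int \dd{y}\; e^{-\alpha x^2 - \beta y^2 + \gamma x y}\,\cos(\omega x + \phi)
\quad\text{rather than}\quad
\int\!\!\int e^{-\alpha x^2 - \beta y^2 + \gamma x y}\,\cos(\omega x + \phi)\,\dd{y}\,\dd{x} .
$$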

Well, that is not quite what it says. Stuff like $\int \dd x \exp{x}$ is quite common, meaning ‘the operation of integration with respect to x is applied to the function e^x’. The first step is quite alright, because you should not read the juxtaposition of the end of the bracket and the function on the second line as ordinary multiplication, but rather as some operation being applied to the function. I say this as a physicist; mathematicians probably want to find me and have me killed.