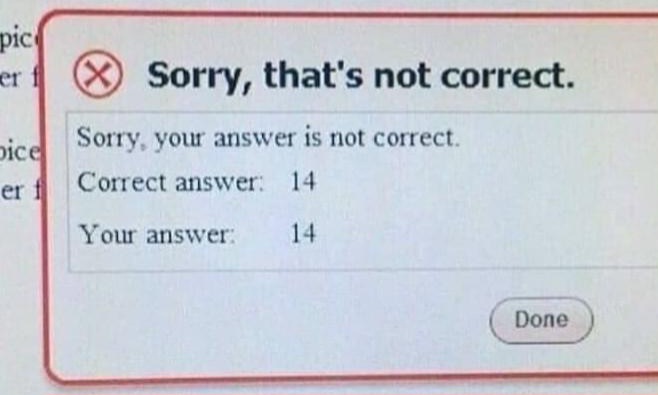

"English-learning students’ scores on a state test designed to measure their mastery of the language fell sharply and have stayed low since 2018 — a drop that bilingual educators say might have less to do with students’ skills and more with sweeping design changes and the automated computer scoring system that were introduced that year.

English learners who used to speak to a teacher at their school as part of the Texas English Language Proficiency Assessment System now sit in front of a computer and respond to prompts through a microphone. The Texas Education Agency uses software programmed to recognize and evaluate students’ speech.

Students’ scores dropped after the new test was introduced, a Texas Tribune analysis shows. In the previous four years, about half of all students in grades 4-12 who took the test got the highest score on the test’s speaking portion, which was required to be considered fully fluent in English. Since 2018, only about 10% of test takers have gotten the top score in speaking each year."

I found during my language-learning journey that talking to people was infinitely better than Duolingo or any other automated learning process. Robots get it wrong when what you say is a little non-standard. Humans will usually understand you even if you're not 100% perfect. Texas is doing those kids a disservice.

Education software sold to schools is dogshit for the most part, and that's when you're using it as a native English speaker. I can't imagine how horrible it would be to try to use something made for non-English speakers.

I thought this was a joke until I had to help my kiddo with remote schoolwork during COVID.

That’s the point. They want to keep non-native speakers in poverty and working entry-level labor jobs.

My partner is ESL (English as a second language). She sometimes repeats a word that I say to practice the pronunciation. Yesterday it was "binoculars," and I explained the roots: "bi," meaning two, and "ocular," as in the eyes. That's not happening with a computer program that can't discern a human's degree of understanding.

Texas doing kids a disservice is what they do best.

I think it was just the testing being done by computer, as opposed to a teacher evaluation test. In other words, the teachers can’t claim a student is good, even if they aren’t.

The teaching was the same, but now the testing became impartial.

I’d think you could fix that by recording the test and flagging scores below a certain level for human review.

FTA: This year, the TEA said that at least 25% of the TELPAS writing and speaking assessments were re-routed to a human scorer to check the program’s work. That number oscillated between 17% and 23% in the previous six years, according to public records obtained by the Tribune.

No shit, a sudden sharp drop with a new test procedure… How could you even start to think it's anything but the test? And why not run the two tests in parallel to make them comparable in the first place? Who are the idiots doing such things? Ask GPT how to do that next time; it can't do worse than this.

Teachers tend to just pass students through. It makes the teachers look better if their students are doing well. What changed with the new test is that it became impartial, so the teachers doing the evaluating can no longer claim that words the students butchered were correct.

I suspect that the human graders were the biased ones, and that this automated test is more accurate. Schools frequently inflate test results when given the opportunity (especially when low results reflect poorly on the school).

How do students known to be fluent in English do on it? Do they pass reliably?

Edit: Here’s a discussion of a similar phenomenon in the context of high-school graduation rates. Graduation rates regularly go up by a very large amount when standardized tests stop being required, but that’s not because otherwise-qualified students were doing poorly on standardized tests.

It’s possible for both things to be true. Human reviewers might be biased towards awarding higher scores and the computer could be dog shit at scoring. I have no idea how this can meaningfully be grading fluency. Fluency in a spoken language consists of vocabulary, grammar, and pronunciation.

I have seen plenty of very fluent people who speak with an extremely noticeable accent and are nonetheless comprehensible. Software is extremely likely to perform poorly at recognizing speech by non-native speakers and to fail individuals who are otherwise comprehensible. Because it won't even recognize the words, it's testing almost nothing but pronunciation, and then denying those students access to electives that would let them further their education.

It’s quite possible that you’re right. I haven’t been able to find any research that attempts to quantify how accurate the software is, and without that I can only speculate.

If I understand the article correctly, the system is doing some kind of AI speech recognition to score how people speak. It’s not a natural environment for people to talk to a computer, and could easily be biased by accents. I doubt any automated scoring that isn’t just multiple choice is accurate.

In my own experience as a fluent English speaker with a strong accent, modern voice-recognition systems have no problem with my accent, though I agree they have flaws. Still, I expect they're more accurate than teachers, because teachers have motives other than accuracy.

Several districts have sued TEA to block the release of the last two years of ratings, arguing that recent changes to the metrics made it harder to get a good rating and could make them more susceptible to state intervention.

My wife and her family (Hispanic; my wife is first generation) have a hell of a time getting Google to understand their requests, yet it has no issue understanding mine, so I could see the software misinterpreting students being a significant problem.

Interesting. A few people have told me that I enunciate more clearly than a native speaker, so if that's the case, my experience with speech-recognition systems won't be representative. That said, older speech-recognition systems did have trouble understanding me whereas newer ones don't, so I think there really has been improvement.

I tried to find data about how students fluent in English do on this test but I wasn’t able to. Comparing native English speakers to native Spanish speakers who have already learned English would be informative.

Huh. My wife’s Filipino accent is pretty heavy and Google almost always understands her.