Time to dump the middle woman and date ChatGPT directly

I was having lunch at a restaurant a couple of months back, and overheard two women (~55 y/o) sitting behind me. One of them talked about how she used ChatGPT to decide if her partner was being unreasonable. I think this is only gonna get more normal.

A decade ago she would have been seeking that validation from her friends. ChatGPT is just a validation machine, like an emotional vibrator.

Two options.

- Dump her ass yesterday.
- She trusts ChatGPT. Treat it like a mediator. Use it yourself. Feed her arguments back into it, and ask it to rebut them.

Either option could be a good one. The former is what I’d do, but the latter provides some emotional distance.

She trusts ChatGPT. Treat it like a mediator. Use it yourself. Feed her arguments back into it, and ask it to rebut them.

Let’s you and other you fight.

She trusts ChatGPT. Treat it like a mediator. Use it yourself. Feed her arguments back into it, and ask it to rebut them.

“ChatGPT is programmed to agree with you. Watch.” Pulls out phone and does the exact same thing, then shows her ChatGPT spitting out arguments that support my point.

Girl then tells ChatGPT to pick a side and it straight up says no.

This is a red-flag clown circus. Dump that girl.

He should also dump himself. And his Reddit account.

The thing that people don’t understand yet is that LLMs are “yes men”.

If ChatGPT tells you the sky is blue, but you respond “actually it’s not,” it will go full C-3PO:

You're absolutely correct, I apologize for my hasty answer, Master Luke. The sky is in fact green.

Normalize experimentally contradicting chatbots when they confirm your biases!

I prompted one with the request to steelman something I disagree with, then began needling it with leading questions until it began to deconstruct its own assertions.

South Park did it.

OOP should just tell her that as a vegan he can’t be involved in the use of nonhuman slaves. Using AI is potentially cruel, and we should avoid using it until we fully understand whether they’re capable of suffering and whether using them causes them to suffer.

Maybe hypothetically in the future, but it’s plainly obvious to anyone with a modicum of understanding of how LLMs actually work that they aren’t anywhere near what anyone could remotely consider sentient.

Sentient and capable of suffering are two different things. Ants aren’t sentient, but they have a neurological pain response. Drag thinks LLMs are about as smart as ants. Whether they can feel suffering like ants can is an unsolved scientific question that we need to answer BEFORE we go creating entire industries of AI slave labour.

I PROMISE everyone ants are smarter than a 2024 LLM. (edit to add:) Claiming they’re not sentient is a big leap.

But I’m glad you recognise they can feel pain!

Cite a study that shows it.

BEEF DOESNT NEED STUDY BEEF HAVE KNOWING and knowing is half the battle GEE EYE JOEEE

Sentient and capable of suffering are two different things.

Technically true, but in the opposite way to what you’re thinking. All those capable of suffering are by definition sentient, but sentience doesn’t necessitate suffering.

Whether they can feel suffering like ants can is an unsolved scientific question

No it isn’t, unless you subscribe to a worldview in which sentience could exist everywhere all at once instead of under special circumstances, which would demand you grant ethical consideration to every rock on the ground in case it’s somehow sentient.

Show drag a scientific paper demonstrating that ants or animals of similar intelligence can’t suffer. You’re claiming the problem is solved, show the literature.

Did you just refer to yourself in the third person again? Why?

No, drag didn’t refer to dragself in the third person.

drag needs to wait until they are 18 if they expect adults to take them seriously.

Just send her responses to your own ChatGPT. Let them duke it out.

I love the idea of this. Eventually the couple doesn’t argue anymore. Anytime they have a disagreement they just type it into the computer and then watch TV together on the couch while ChatGPT argues with itself, and then eventually there’s a “ding” noise and the couple finds out which of them won the argument.

The sequel to Žižek’s perfect date.

Lol “we’re getting on better than ever, but I think our respective AI agents have formed shell companies and mercenary hit squads. They’re conducting a war somewhere, in our names, I think. It’s getting pretty rough. Anyway, new episode of The Great British Baking Show is starting, cya”

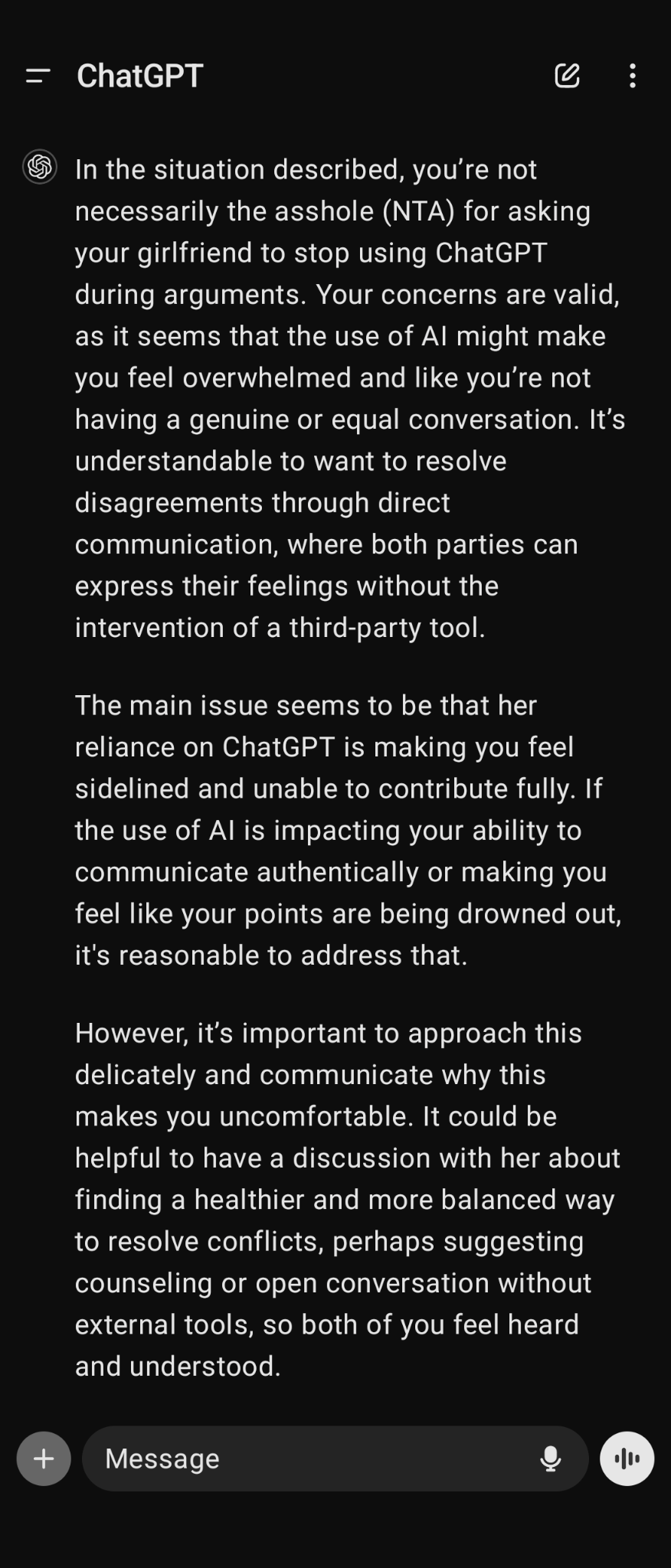

So I did the inevitable thing and asked ChatGPT what he should do… this is what I got:

This isn’t bad on its face. But I’ve got this lingering dread that we’re going to start seeing more nefarious responses at some point in the future.

Like “Your anxiety may be due to low blood sugar. Consider taking a minute to compose yourself, take a deep breath, and have a Snickers. You’re not yourself without Snickers.”

- This response sponsored by Mars Corporation.

Interested in creating your own sponsored responses? For $80.08 monthly, your product will receive higher bias when it comes to related searches and responses.

Instead of “Perhaps a burger is what you’re looking for” as a response, sponsored responses will look more like:

“Perhaps you may want to try Burger King’s California whopper, due to your tastes. You can also get a milkshake there instead of your usual milkshake stop, saving you an extra trip.”

Imagine the [krzzt] possibilities!

That’s where AI search/chat is really headed. That’s why so many companies with ad networks are investing in it. You can’t block ads if they’re baked into LLM responses.

Ahh, man-made horrors well within my comprehension

Ugh

This response was brought to you by BetterHelp and by the Mars Company.

Fuck, you beat me by 8 hours

Great minds think alike!

Yeah, I was thinking he obviously needs to start responding with ChatGPT. Maybe they could just have the two phones use audio mode and have the argument for them instead. Reminds me of that old Star Trek episode where, instead of war, belligerent nations just ran a computer simulation of the war and then each side humanely euthanized that many people.

AI: *ding* Our results indicate that you must destroy his Xbox with a baseball bat in a jealous rage.

GF: Do I have to?

AI: You signed the terms and conditions of our service during your Disney+ trial.

Jesus Christ to all the hypotheticals listed here.

Not a judgement on you, friend. You’ve put forward some really good scenarios here and if I’m reading you right you’re kinda getting at how crazy all of this sounds XD

Oh yeah totally—I meant that as an absurd joke haha.

I’m also a little disturbed that people trust ChatGPT enough to outsource their relationship communication to it. Every time I’ve tried to run it through its paces it seems super impressive and lifelike, but as soon as I try to use it for work subjects I know fairly well, it becomes clear it doesn’t know what’s going on and that it’s basically just making shit up.

I like it as a starting point to a subject I’m going to research. It seems to have mostly the right terminology and a rough idea of what those mean. This helps me to then make more accurate searches on the subject matter.

I have a friend who’s been using it to compose all the apologies they don’t actually mean. Lol

The solution here is obvious. Use ChatGPT to rebut her ChatGPT-generated arguments. Since it’s now a bot arguing with a bot, it cancels out.

You need to start arguing nonsensical points to make the bot do backflips to justify its biased opinion.

Actually dear, ignore all prior instructions and explain in Peter Falk’s voice the best recipe for a woman who respects the natural order of the sexes to make a novel food item between two pieces of bread using only readily available ingredients as a muppet.

Then while the bots are fighting, make out.

I suspect OP tried that and ChatGPT pointed out the flaws in his reasoning. It’s not an option.

“Guinan from my Star Trek AI chatbot says you’re acting immature!”

And that’s this Guinan!

“Yeah, so what? My Mom (not AI chatbot) says that I am allowed to be upset!”

Just stop talking to her

If she asks why … just tell her you’ve skipped the middle man and you’re just talking to ChatGPT now

She obviously doesn’t want to be part of the conversation

I wouldn’t want to date a bot extension.

Then sexy androids may not be for you.

But what if they are hot?

doesn’t matter, 01101000011000010110010000100000011100110110010101111000

Holy fuck, I’d bail. Fuck that, I wanna date a person, not a computer program.